What Is GPU Computing and How Is It Applied Today?

GPUs, or graphics processing units, are best known for accelerating the rendering of 3D graphics in video editing and gaming. However, GPU computing has become prevalent in other areas as well, such as deep learning and machine learning, data science, computational finance, and manufacturing.

#What is a GPU?

A GPU, or graphics processing unit, is a specialized microprocessor originally designed to accelerate graphics rendering. It is often referred to as a graphics card, although the card is, strictly speaking, the board that carries the GPU chip. Today GPUs are used for heavy processing tasks such as video editing, image processing, animation, gaming, crypto mining, and AI video generation. For example, GPUs have become essential for enhancing the performance of animated video makers, speeding up the creation of captivating visuals.

#What is GPU computing?

GPU computing is the use of a graphics processing unit (GPU) to perform highly parallel, independent calculations that were once handled by the central processing unit (CPU). Offloading this work from the CPU to the GPU improves performance through parallel computing.

#What is GPU cloud computing?

GPU computing in the cloud removes the need to buy and maintain expensive hardware on-premises. Cloud computing provides on-demand computing resources: the servers are managed elsewhere, and you pay as you go only for the resources you use. Compared with buying and maintaining a physical server, this model has brought flexibility and agility to businesses.

Much of GPU computing work is now done in the cloud. Various companies offer on-demand services covering a range of GPU types, configurations, and workload needs, as both bare metal cloud and virtual machine instances. Examples include large enterprise-level cloud providers such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure, as well as cloud infrastructure providers for small and medium-sized companies, such as Cherry Servers.

#History of GPU computing

Traditionally, GPUs have been used to accelerate memory-intensive calculations for computer graphics, such as image rendering and video decoding. These problems lend themselves well to parallelization. With its numerous cores and superior memory bandwidth, the GPU became an indispensable part of graphical rendering.

While GPU-driven parallel computing was essential to graphical rendering, it also turned out to work really well for some scientific computing jobs. Consequently, GPU computing started to evolve more rapidly in 2006, becoming suitable for a wide array of general-purpose computing tasks.

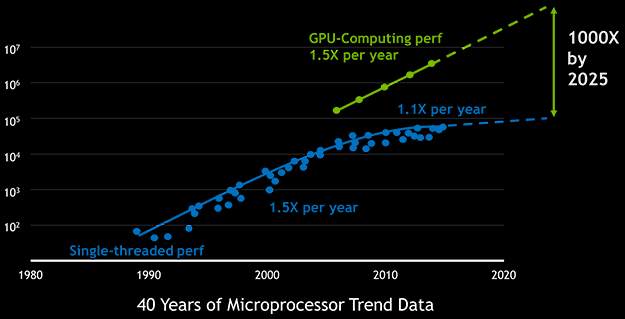

Existing GPU instruction sets were improved, and more instructions could be executed within a single clock cycle, enabling steady growth in GPU computing performance. Today, as Moore’s law has slowed (some even say it is over), GPU computing is keeping its pace.

Image 1 - Nvidia Investor Day 2017 Presentation. Huang’s law extends Moore’s law - the performance of GPUs will more than double every two years.

#CPU vs GPU comparison

Architecturally, a CPU is composed of a few cores with lots of cache memory and handles a few software threads at a time using sequential processing. In contrast, a GPU is composed of thousands of smaller cores that can manage many threads simultaneously.

Even though a CPU can handle a considerable number of tasks, it would not get through them as fast as a GPU. A GPU breaks down complex problems into thousands of separate tasks and works through them simultaneously.

A CPU is much better at handling general-purpose functions because it runs processes serially; it is good at processing one big task at a time. A GPU, on the other hand, runs processes in parallel and is therefore better at processing many smaller tasks at once.

GPU vs CPU processing. Source: towardsdatascience.com

#GPU computing benefits and limitations

A GPU is a specialized co-processor that, as with many other things, comes with benefits and limitations: it excels at some tasks and is not so good at others. It works in tandem with a CPU to increase the throughput of data and the number of concurrent calculations within the application.

So how exactly does GPU computing excel? Let’s dive into GPU benefits and limitations.

#Arithmetic Intensity

GPUs cope extremely well with high arithmetic intensity. An algorithm is a good candidate for GPU acceleration if its ratio of math to memory operations is at least 10:1. If this is the case, your algorithm can benefit from GPU-accelerated basic linear algebra subroutines (BLAS) and the GPU’s numerous arithmetic logic units (ALUs).
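As a rough illustration of the 10:1 rule of thumb, the Python sketch below counts operations for two textbook kernels. The function names and the counting model (one memory operation per element loaded or stored, and ideal data reuse for the matrix multiply) are assumptions made for this example, not measurements from real hardware.

```python
GPU_CANDIDATE_RATIO = 10.0  # the 10:1 rule of thumb from the text


def arithmetic_intensity(math_ops, memory_ops):
    """Ratio of arithmetic operations to memory operations."""
    if memory_ops <= 0:
        raise ValueError("memory_ops must be positive")
    return math_ops / memory_ops


def vector_add_counts(n):
    """c[i] = a[i] + b[i]: one add, two loads and one store per element."""
    return n, 3 * n


def matmul_counts(n):
    """Naive n x n matrix multiply: n^3 multiplies plus n^3 adds.

    Memory operations assume ideal reuse: every element of the two
    inputs and the output is touched once (3 * n^2 accesses).
    """
    return 2 * n ** 3, 3 * n ** 2


def is_gpu_candidate(math_ops, memory_ops, threshold=GPU_CANDIDATE_RATIO):
    """Apply the rule of thumb to a pair of operation counts."""
    return arithmetic_intensity(math_ops, memory_ops) >= threshold


if __name__ == "__main__":
    for name, counts in [("vector add", vector_add_counts(1_000_000)),
                         ("matmul 1024", matmul_counts(1024))]:
        print(name, round(arithmetic_intensity(*counts), 2),
              is_gpu_candidate(*counts))
```

Under these assumptions, element-wise vector addition has an intensity of about 0.33 and falls well short of the threshold, while a 1024 x 1024 matrix multiply comes out at roughly 683.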

#High Degree of Parallelism

Parallel computing is a type of computation where many independent calculations are carried out simultaneously. Large problems can often be divided into smaller pieces which are then solved concurrently. GPU computing is designed to work like that. For instance, if it is possible to vectorize your data and adjust the algorithm to work on a set of values all at once, you can easily reap the benefits of GPU parallel computing.
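To make the idea of independent calculations concrete, here is a minimal sketch of the SAXPY operation (`y = a * x + y`), where each output element depends only on the matching input elements. The helper names are hypothetical, and the thread pool is only a stand-in for GPU threads: in CPython it shows how the work is split into chunks and will not deliver a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_chunk(a, xs, ys):
    """Compute a * x + y for one chunk of data.

    No element depends on any other, so the elements could be
    computed in any order, or all at the same time.
    """
    return [a * x + y for x, y in zip(xs, ys)]


def parallel_saxpy(a, xs, ys, workers=4):
    """Split the input into chunks and process them concurrently."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    chunk = max(1, -(-n // workers))  # ceiling division
    starts = range(0, n, chunk)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(
            lambda s: saxpy_chunk(a, xs[s:s + chunk], ys[s:s + chunk]),
            starts,
        )
        # map() preserves chunk order, so results line up with the inputs.
        return [value for part in parts for value in part]


if __name__ == "__main__":
    xs = [float(i) for i in range(8)]
    ys = [1.0] * 8
    print(parallel_saxpy(2.0, xs, ys))
```

An algorithm that can be written in this shape, with one small function applied to many independent pieces of data, is the kind that maps naturally onto thousands of GPU cores.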

#Sufficient GPU Memory

Ideally, your data batch should fit into the native memory of your GPU in order to be processed seamlessly. Although there are workarounds, such as using multiple GPUs simultaneously or streaming your data from system memory, limited PCIe bandwidth may become a major performance bottleneck in such scenarios.
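A back-of-the-envelope check can show whether a batch is likely to fit and how long it would take to copy it to the GPU. The sketch below is illustrative only: the 16 GiB of GPU memory, the 16 GB/s bus bandwidth, and the 10% memory reserve are hypothetical figures, and the helper names are made up for this example.

```python
GIB = 1024 ** 3


def batch_bytes(n_values, bytes_per_value=4):
    """Size of a batch in bytes (4 bytes per value assumes float32)."""
    return n_values * bytes_per_value


def fits_in_gpu_memory(n_bytes, gpu_memory_bytes, reserve_fraction=0.1):
    """True if the batch fits after reserving part of the memory for
    intermediate results (the 10% reserve is an arbitrary assumption)."""
    usable = gpu_memory_bytes * (1.0 - reserve_fraction)
    return n_bytes <= usable


def transfer_seconds(n_bytes, bandwidth_bytes_per_s):
    """Idealized time to copy a batch over the bus, ignoring latency."""
    return n_bytes / bandwidth_bytes_per_s


# Hypothetical hardware figures, chosen only for illustration.
GPU_MEMORY = 16 * GIB
PCIE_BANDWIDTH = 16e9  # bytes per second

small = batch_bytes(1_000_000_000)  # 4 GB of float32 values
large = batch_bytes(5_000_000_000)  # 20 GB of float32 values

if __name__ == "__main__":
    for label, size in [("small", small), ("large", large)]:
        print(label,
              fits_in_gpu_memory(size, GPU_MEMORY),
              transfer_seconds(size, PCIE_BANDWIDTH))
```

With these figures the 4 GB batch fits and takes about a quarter of a second to copy, while the 20 GB batch does not fit and would have to be split or streamed, paying the transfer cost repeatedly.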

#Enough Storage Bandwidth

In GPU computing you typically work with large amounts of data, so storage bandwidth is crucial. Today the bottleneck for GPU-based scientific computing is often no longer floating-point operations per second (FLOPS), but I/O operations per second (IOPS). As a rule of thumb, it’s always a good idea to evaluate your system’s global bottleneck. If you find out that your GPU acceleration gains will be outweighed by the storage throughput limitations, optimize your storage solution first.
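One simple way to reason about the global bottleneck is to treat the workload as a pipeline whose pace is set by its slowest stage. The following sketch does exactly that; the stage names and throughput figures (in GB/s) are hypothetical, and the model assumes the stages overlap perfectly, which real systems only approximate.

```python
def find_bottleneck(stage_throughput):
    """Return (stage name, throughput) of the slowest pipeline stage."""
    if not stage_throughput:
        raise ValueError("at least one stage is required")
    name = min(stage_throughput, key=stage_throughput.get)
    return name, stage_throughput[name]


def processing_seconds(data_gb, stage_throughput):
    """Idealized run time of a fully pipelined workload:
    the slowest stage determines the overall pace."""
    _, slowest = find_bottleneck(stage_throughput)
    return data_gb / slowest


# Hypothetical throughput figures in GB/s, for illustration only.
cpu_only = {"storage": 0.5, "compute": 0.2}
with_gpu = {"storage": 0.5, "pcie": 16.0, "compute": 4.0}

if __name__ == "__main__":
    for label, stages in [("CPU only", cpu_only), ("with GPU", with_gpu)]:
        print(label, find_bottleneck(stages), processing_seconds(100, stages))
```

In this made-up scenario the compute stage becomes 20 times faster with a GPU, yet processing 100 GB only drops from 500 to 200 seconds, because storage becomes the limiting stage.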

#GPU computing applications and use cases

GPU computing is being used for numerous real-world applications. Many prominent science and engineering fields that we take for granted today would not have progressed so fast if not for GPU computing.

#1. Deep Learning

Deep learning is a subset of machine learning. Its implementation is based on artificial neural networks. Essentially, it mimics the brain, having neuron layers work in parallel. Since data is represented as a set of vectors, deep learning is well-suited for GPU computing. You can see performance gains of up to 4x when training a convolutional neural network on a dedicated server with a GPU accelerator. As a cherry on top, major deep learning frameworks such as TensorFlow and PyTorch support GPU computing out of the box, with little or no change to your code.

#2. Drug Design

The successful discovery of new drugs is hard in every respect. We have all become aware of this during the Covid-19 pandemic. Eroom’s law states that the cost of discovering a new drug roughly doubles every nine years. Modern GPU computing aims to shift the trajectory of Eroom’s law. Nvidia’s Cambridge-1, launched in 2021 as the most powerful supercomputer in the UK, is dedicated to AI research in healthcare and drug design.

#3. Seismic Imaging

Seismic imaging is used to provide the oil and gas industry with knowledge of Earth’s subsurface structure and detect oil reservoirs. The algorithms used in seismic data processing are evolving rapidly, so there’s a huge demand for additional computing power. For instance, the Reverse Time Migration method can be accelerated up to 14 times when using GPU computing.

#4. Automotive design

Flow field computations for transient and turbulent flow problems are highly compute-intensive and time-consuming. Traditional techniques often compromise on the underlying physics and are not very efficient. A new paradigm for computing fluid flows relies on GPU computing that can help achieve significant speed-ups over a single CPU, even up to a factor of 100.

#5. Astrophysics

GPU computing has dramatically changed the landscape of high performance computing in astronomy. Take, for instance, an N-body simulation, which numerically approximates the evolution of a system of bodies in which each body continuously interacts with every other body. You can accelerate the all-pairs N-body algorithm up to 25 times by using GPU computing rather than a highly tuned serial CPU implementation.
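The all-pairs approach can be sketched in a few lines of plain Python. This is a serial reference version under stated assumptions (Newtonian gravity, a softening term to avoid division by zero, and a hypothetical function name), not a GPU implementation; it is here to show why the problem parallelizes well, since the acceleration of each body can be computed independently of all the others.

```python
import math


def all_pairs_accelerations(positions, masses, g=1.0, softening=1e-3):
    """Gravitational acceleration on every body from every other body.

    positions: list of (x, y, z) tuples; masses: list of floats.
    The outer loop iterations are independent of one another, so on a
    GPU each body i could be handled by its own thread.
    """
    n = len(positions)
    accelerations = []
    for i in range(n):
        xi, yi, zi = positions[i]
        ax = ay = az = 0.0
        for j in range(n):
            if i == j:
                continue
            dx = positions[j][0] - xi
            dy = positions[j][1] - yi
            dz = positions[j][2] - zi
            dist_sq = dx * dx + dy * dy + dz * dz + softening ** 2
            # a_i += G * m_j * r_ij / |r_ij|^3
            scale = g * masses[j] / (dist_sq * math.sqrt(dist_sq))
            ax += dx * scale
            ay += dy * scale
            az += dz * scale
        accelerations.append((ax, ay, az))
    return accelerations


if __name__ == "__main__":
    bodies = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
    print(all_pairs_accelerations(bodies, [1.0, 1.0, 1.0]))
```

The work grows with the square of the number of bodies, which is why spreading the outer loop across many cores pays off quickly as the system gets larger.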

#6. Options pricing

The goal of option pricing theory is to provide traders with an option’s fair value that can then be incorporated into their trading strategies. Some type of Monte Carlo algorithm is often used in such simulations. GPU computing can help you achieve 27 times better performance per dollar compared to a CPU-only approach.
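As an illustration of why Monte Carlo pricing suits parallel hardware, the sketch below prices a European call option by simulating independent price paths. The choice of a European call, the geometric Brownian motion model, the parameter values, and the function names are all assumptions made for this example; the closed-form Black-Scholes price is included only as a sanity check.

```python
import math
import random


def mc_european_call(spot, strike, rate, vol, maturity, n_paths, seed=0):
    """Monte Carlo price of a European call under geometric Brownian motion.

    Every simulated path is independent of the others, so on a GPU
    the loop body could run as one thread per path.
    """
    rng = random.Random(seed)
    drift = (rate - 0.5 * vol ** 2) * maturity
    diffusion = vol * math.sqrt(maturity)
    payoff_sum = 0.0
    for _ in range(n_paths):
        z = rng.gauss(0.0, 1.0)
        terminal = spot * math.exp(drift + diffusion * z)
        payoff_sum += max(terminal - strike, 0.0)
    # Discount the average payoff back to today.
    return math.exp(-rate * maturity) * payoff_sum / n_paths


def black_scholes_call(spot, strike, rate, vol, maturity):
    """Closed-form Black-Scholes price, used here as a reference value."""
    def norm_cdf(x):
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(spot / strike)
          + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt_t)
    d2 = d1 - vol * sqrt_t
    return spot * norm_cdf(d1) - strike * math.exp(-rate * maturity) * norm_cdf(d2)


if __name__ == "__main__":
    params = dict(spot=100.0, strike=100.0, rate=0.05, vol=0.2, maturity=1.0)
    print("Monte Carlo:  ", mc_european_call(n_paths=200_000, **params))
    print("Black-Scholes:", black_scholes_call(**params))
```

For these parameters the closed-form price is about 10.45, and the Monte Carlo estimate approaches it as the number of paths grows. Because accuracy improves only with the square root of the path count, real workloads need very many paths, which is where running them in parallel helps.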

#7. Weather forecasting

Weather forecasting has greatly benefited from the exponential growth of raw computing power in recent decades, but this free ride is nearly over. Today weather forecasting is driven by fine-grained parallelism based on extensive GPU computing. This approach alone can make weather forecasting models up to 20 times faster.

#GPU computing in the cloud

Even though GPU computing was once primarily associated with graphical rendering, it has grown into the main driving force of high performance computing in many different scientific and engineering fields.

Most GPU computing work is now done in the cloud or on in-house GPU computing clusters. Here at Cherry Servers we offer Dedicated GPU Servers with high-end Nvidia GPU accelerators. Our infrastructure services can be used on-demand, which makes GPU computing easy and cost-effective.

Cloud vendors have democratized GPU computing, making it accessible for small and medium businesses world-wide. If Huang’s law lasts, the performance of GPUs will more than double every two years, and innovation will continue to sprout.

Harness the power of GPU acceleration anywhere. Deploy CUDA and machine learning workloads on robust hardware tailored for GPU intensive tasks.