What is DevOps? An Intersection of Culture, Processes and Tools

#What is DevOps and why should you burn no bridges?

Back in the day, system administrators had mastered the art of avoiding software developers and rejecting system changes unless they were perfect. People said that when developers had their worst nightmares, they dreamt of a spooky, unshaven admin yelling at them because of some quirky bug in their code. There were even rumors of software so refined and polished that it was never rolled out, since operations engineers were afraid it had become too pure for us mere mortals.

When Uncle Bob and his fellow developers formulated the Agile Manifesto, it was clear as day that this situation was about to change. Their aim was to uncover better ways of developing software by doing it and helping others do it. And that they did. The statements created at the lodge in Utah, like 'individuals and interactions over processes and tools' or 'responding to change over following a plan', formed the basis for a lightweight software development movement that grew into the DevOps revolution a decade later.

DevOps is often defined as a culture of collaboration between operations and development engineers who work together across the entire service lifecycle, from product design through the development process to production operations. As Brian Dawson from CloudBees once put it, DevOps is a trinity of people and culture, process and practice, tools and technology. It shatters all silos and builds a solid bridge between development and operations on these three levels. Collaboration at its finest.

At its core, DevOps aims at building better software faster. Its promise is invaluable for developer-driven businesses: faster time to market, higher ROI, greater user satisfaction, increased efficiency and other perks. It’s no wonder that, according to Statista, only 9% of respondents in 2018 reported that they had not adopted DevOps and had no plans to do so.

#DevOps as a Culture

Business culture is the predominant mindset across an organization. It’s the way we collectively think about our mutual efforts in a company. Obviously, an organization needs to be well aligned around its vision statement, goals and processes to act effectively and be profitable. DevOps culture is centered around collaboration. In a team where everyone focuses on the same goal and communicates extensively, no barriers, tensions or silos exist. Forget the blame game: product owners, developers, QA and IT operations all work hand in hand towards delivering higher quality releases at a faster pace.

#Teamwork at its best

In the old days ‘devs’ were all about creating new features regardless of their quality, while ‘ops’ valued stability more than anything. Today DevOps teams work in small multidisciplinary groups, tightly coupled with the applications they support. Such groups work autonomously and take collective accountability for how customers experience software. Working together helps everyone stay aligned with the same goal of delivering quality software to customers. The team relies on a product-first mindset, where the needs of real users are proactively collected and acted on. At the end of the day, it’s not your Product Director but your customers who are using your software, so putting them on a pedestal is most often a good idea.

#The feedback loop

Alright, I’ve tricked you a little bit. DevOps is not so much about getting code to production as about getting ideas into production and failing fast. This willingness to fail fast is one of the more difficult aspects of implementing a DevOps culture. People often fall into the trap of getting too attached to their ideas. They may take things personally and start to avoid failure at all costs. The longer your team works on an idea, the stronger this tendency becomes. This is the main reason why the waterfall software development model is flawed. By failing fast you lower the cost of failure, and it becomes a mere learning experience. There’s a well-established feedback loop to verify your ideas and find out whether they are worth further investment. Activities like daily scrums, code reviews, unit tests and continuous monitoring help you adapt to constant change.

#Continuous learning

DevOps teams focus on competencies instead of roles. Roles are rigid and clunky, while competencies can be acquired on the go. It’s paramount to develop the habit of thinking beyond your own area of expertise and to never stop learning. It helps people understand each other’s functions better and stay up to date in this ever-changing tech world. And when you have your next epiphany, don’t shy away from sharing it with your colleagues.

#DevOps as a Business Process

As the DevOps Handbook puts it, DevOps can be distilled into three main patterns when it comes to business processes. The first is systems thinking, meaning that the performance of the entire system always takes priority over the performance of a specific department. The work from development to operations should flow in small batches, keeping work in progress limited and increasing change frequency. Next, there is the so-called shift-left feedback loop, which requires you to integrate automated testing into every development and operations activity. This way you can shorten and amplify your feedback loop to make changes constant and safe. Finally, your team needs to practice systematic experimentation, which is basically the fail-fast mentality that I’ve mentioned earlier. You can embrace it by allocating time to improve daily work, rewarding the team for taking risks and injecting faults into your system to increase its resilience.

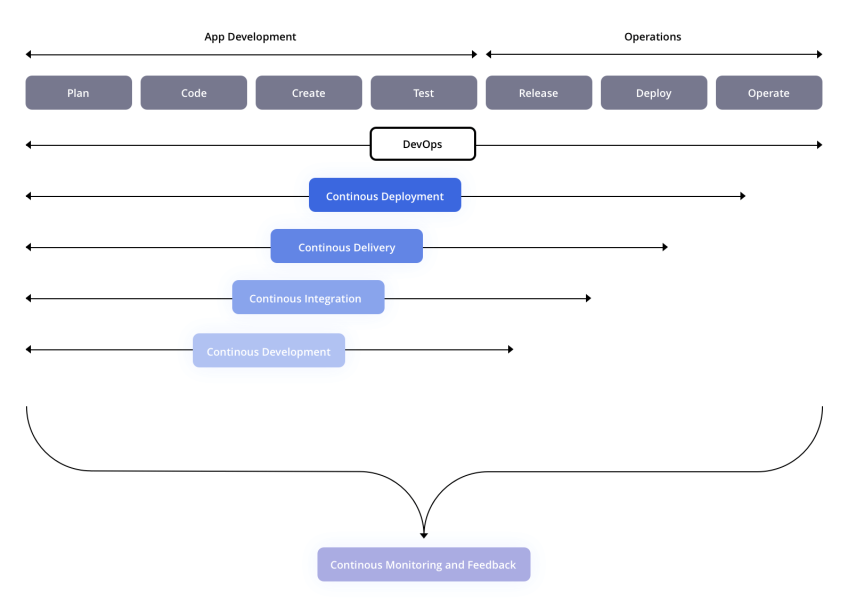

With these three DevOps patterns in mind, let’s now unwrap the DevOps software development lifecycle phase by phase. Every stage is focused on agility and automation, with the aim of shortening the software delivery process and increasing its quality.

#Continuous integration - always work with the latest code version

Continuous Integration (CI) is a development practice in which developers regularly merge their code changes into a shared repository where those updates are automatically tested. Code needs to be merged at least once a day. Each code check-in is then verified by an automated build to detect conflicting code early on. This way bugs can be detected quickly and without much effort. The most up-to-date and validated code is always readily available to developers.

So, you come to work and pull code into your private workspace. The coffee is being consumed and the work happens. When you are done, you commit your code changes to the central repository and the Continuous Integration server takes it from here. The server continuously monitors for incoming code commits. When it receives one, it builds the system and runs unit and integration tests. Deployable artifacts are released for testing, a build version is assigned to your code and the whole team is informed about the successful build. If your build or tests fail, everyone is alerted and you have to fix the issue ASAP so that the process can continue.

Each developer is responsible for doing a complete build and passing all the tests. The main point of CI is that everyone is working on a known stable code base. Therefore, if your build fails, nothing has a higher priority than fixing the build.
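The loop described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular CI server is implemented: the `Commit` and `BuildResult` types, the `run_pipeline` function and the stage callables are all hypothetical names made up for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Commit:
    """A hypothetical code check-in received by the CI server."""
    author: str
    revision: str


@dataclass
class BuildResult:
    """Outcome of one pipeline run (illustrative structure only)."""
    revision: str
    passed: bool
    failed_stage: str = ""
    notifications: List[str] = field(default_factory=list)


# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[Commit], bool]]


def run_pipeline(commit: Commit, stages: List[Stage]) -> BuildResult:
    """Run the stages in order and stop at the first failure."""
    result = BuildResult(revision=commit.revision, passed=True)
    for name, stage in stages:
        if not stage(commit):
            result.passed = False
            result.failed_stage = name
            # Everyone is alerted; fixing the build is now the top priority.
            result.notifications.append(
                f"FAILED {name} at {commit.revision}: {commit.author}, please fix"
            )
            return result
    result.notifications.append(f"OK {commit.revision}: build is green")
    return result
```

In a real setup each stage would shell out to your build tool and test runner. Here a stage could be as simple as `("unit tests", lambda commit: True)`, which is enough to show that a failing stage stops everything that comes after it.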

#Continuous delivery - build, test and package your code to the stage

Continuous Delivery (CD) is the next logical step from CI. It is the practice of frequently building, testing and packaging code changes for a release into production. CD automates the release process so that new builds can be released at the click of a button. Such practice accelerates time to market and allows you to obtain user feedback more quickly.

With CD your code is not released straight to production, so you can still do manual user testing in the staging environment. This can be very handy if your business operates in a sensitive domain, such as telecom or healthcare, where regulations require extensive testing. Some of your customers may not want continuous updates to their systems. Finally, there may be some edge cases where code releases cannot be easily verified with automation: building automated tests can simply take an unreasonably long time and still be error prone.

#Continuous deployment - why stage? Let’s go live, baby!

In a Continuous Deployment process, every validated build is automatically released. Once a developer commits a code change, there is zero manual intervention before it gets to production. You can now build even smaller and more frequent releases. This way you accelerate time to production, reduce complexity by not having a staging environment and no longer need to schedule your releases. Your feedback loop gets shorter, since you now get user feedback quicker.

Continuous Deployment is the golden goose of DevOps, but it is best applied after your DevOps team has nailed down its processes. For continuous deployment to work well, organizations need to have a rigorous and reliable automated testing environment. If you are not there yet, start with Continuous Delivery to have that extra safety step and transition gradually.
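The difference between the two practices boils down to a single gate. The sketch below is a toy model of that gate, not a real release tool: the `Mode` enum and the `next_step` function are hypothetical names used only for illustration.

```python
from enum import Enum


class Mode(Enum):
    DELIVERY = "continuous delivery"      # a human clicks the release button
    DEPLOYMENT = "continuous deployment"  # no manual step before production


def next_step(build_passed: bool, mode: Mode, approved: bool = False) -> str:
    """Decide what happens to a build after the automated checks."""
    if not build_passed:
        return "rejected"
    if mode is Mode.DEPLOYMENT:
        # Every validated build goes live automatically.
        return "released to production"
    # Continuous delivery: the build is packaged and waits for approval.
    return "released to production" if approved else "waiting in staging"
```

Switching a team from delivery to deployment is then a matter of trusting the automated checks enough to drop the `approved` step, which is why solid test automation has to come first.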

#Continuous monitoring - tomorrow’s app today

You can’t manage what you can’t measure. Continuous Monitoring will help you keep your CI/CD pipelines flowing smoothly, giving you more confidence in your deployments and allowing you to react to changing customer needs even faster. By monitoring your system and its environment you will know immediately whether your application is performing as desired, or if its performance has degraded. You can then react accordingly.

When implementing continuous monitoring the right way you need to track several layers of your system at once:

- You want to track development milestones to know how effectively your team is operating and how well your DevOps adoption process is going.

- You want to feel the pulse of your infrastructure health, like server uptime, performance and available resources.

- You want to be alerted ASAP when your code build fails so that it does not jam your CI/CD pipeline.

- You want to see all the errors and exceptions in your application log in real time and make them traceable by your code commit tag.

- You want to spot any code vulnerabilities, whether that’s a third-party dependency issue or an insecure coding practice of your developers.

- You want to log your user activity for feature improvements and scalability purposes.

A lion’s share of your monitoring process should be automated not only to provide you with continuous feedback in real time, but also to allow your system to take proactive actions. You don’t want to be reacting manually each time something happens. Life’s more beautiful when you have clearly defined your triggers and created what-if automation rules to ensure self-improvement of your system.
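Such what-if rules can be as simple as a list of triggers paired with actions. Here is a minimal sketch, assuming metrics arrive as a plain dictionary. The metric names, thresholds and action strings are invented for the example and do not come from any specific monitoring tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    """A hypothetical what-if rule: when the trigger fires, take the action."""
    name: str
    trigger: Callable[[Dict[str, float]], bool]
    action: str


def evaluate(metrics: Dict[str, float], rules: List[Rule]) -> List[str]:
    """Return the actions of every rule whose trigger matches the metrics."""
    return [rule.action for rule in rules if rule.trigger(metrics)]


# Thresholds below are arbitrary illustration values, not recommendations.
RULES = [
    Rule("high cpu", lambda m: m.get("cpu_percent", 0.0) > 85.0, "scale out"),
    Rule("low disk", lambda m: m.get("disk_free_gb", float("inf")) < 5.0, "rotate logs"),
    Rule("error spike", lambda m: m.get("errors_per_min", 0.0) > 50.0, "roll back last release"),
]
```

Calling `evaluate({"cpu_percent": 92.0, "disk_free_gb": 40.0}, RULES)` would select only the scale-out action. A real system would execute the action instead of returning its name, but the shape of the rule stays the same.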

#Virtualization and containerization – let’s go micro-services

Virtualization is basically just another abstraction layer. It abstracts away physical hardware components to create aggregated pools of resources. In server virtualization, the main goal is to achieve greater computing density by overbooking hardware resources. It means you trade off the consistency of your workloads for cheaper hardware. It’s the main reason why Virtual Machines have thrived for the last decade, despite the noisy neighbor problem and some inherent security vulnerabilities they possess.

DevOps pushes virtualization a step further by introducing containerization. Docker containers offer resource isolation and allocation benefits similar to those of VMs, but containers are more portable and efficient. Each container packs up your code along with its dependencies to run it as a single process. Multiple containers can run on the same host machine and share the same OS kernel.

Containers simplify the CI/CD pipeline by a substantial margin. They make development, testing and production environments consistent. This not only eliminates the “it works on my machine” problem, but also makes collaboration between teams easier. Containers make it dead simple to deliver constant updates to your application. Just kill one of your containers and spin up a new one, leaving other parts of your micro-services application intact. Finally, containers allow you to mix different frameworks or even programming languages, thanks to their platform-agnostic nature. If your system is past the minimum viable product stage, I strongly recommend containerizing your application to level up your business agility.
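To make the "kill one, spin up a new one" idea concrete, here is a tiny in-memory simulation. It does not talk to Docker or any orchestrator. The `rolling_update` function and the service names are hypothetical, and a dictionary stands in for the set of running containers.

```python
from typing import Dict, List


def rolling_update(services: Dict[str, str], name: str, new_version: str) -> List[str]:
    """Replace one service's container and return a log of the steps taken.

    `services` maps a service name to the version currently running.
    Only the entry for `name` is changed; all other services stay intact.
    """
    if name not in services:
        raise KeyError(f"unknown service: {name}")
    old_version = services[name]
    log = [f"stop {name}:{old_version}"]   # kill the old container
    services[name] = new_version
    log.append(f"start {name}:{new_version}")  # spin up the new one
    return log
```

Updating a hypothetical `cart` service from `1.0` to `1.1` touches only that one entry, which is the property that makes frequent small releases cheap in a micro-services setup.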

#DevOps as a Set of Tools

I know, I know, DevOps is not primarily about tools, but it’s no good to pretend they don’t exist either. The landscape of cloud-native DevOps technologies is vast, with a number of tools for each DevOps process phase. I will briefly go through some of the most important DevOps technologies that you may want to try out with your team.

- Planning and collaboration. You need a tool to help you track the progress of your job when working on a project. Technologies like Jira, Asana and Trello are all worth considering.

- Source code management. Storing your code and sharing it with colleagues is an essential part of your DevOps culture. GitHub, GitLab and Bitbucket can help you here.

- Package management. Containers are the de facto building blocks of a modern DevOps micro-services application. You will probably stick with Docker due to its maturity and wide adoption, but rkt is out there as well.

- CI/CD. Automated code integration, building and testing processes are at the heart of every DevOps culture. Jenkins, Travis CI or GitLab can help you establish this in your organization.

- Configuration management. As a DevOps engineer you want to ensure that your infrastructure is deployed and managed reliably all the time. Tools like Ansible, Chef, Puppet or SaltStack do precisely that.

- Infrastructure management. Treat your infrastructure as code to get resources on demand from your chosen cloud provider. Don’t bother looking further than Terraform. It has outdistanced all its competitors by far.

- Scheduling and orchestration. Group containers, load balance them, self-heal your system, auto-scale it, manage DNS and do many other incredible things with Kubernetes out of the box. In case you don’t need that much control and complexity, you can get away with Docker Swarm as well.

- Continuous Monitoring. Smart DevOps engineers improve their applications and tool-chains before problems even show up. Prometheus, Nagios and New Relic can be your eyes and ears here.

I’ve barely touched on the most popular DevOps tools here, and there are numerous other DevOps technologies available on the market. As most of these tools are open source and completely free of charge, you had better have a solid reason to choose a proprietary vendor. Open source software not only prevents vendor lock-in and unnecessary expenses, but also enhances the overall quality and security of your application by giving you visibility into the source code of your stack.

#On a Final Note

I'm sure the next time your colleague asks you what DevOps is, you will confidently claim it's not just about culture, processes or tools, but rather a combination of these three facets. DevOps shatters silos and helps you build a solid bridge between development and operations on these three levels. Collaboration at its finest.
