How to Deploy Kubernetes on Bare Metal: Step-by-Step

Bare metal here refers to a physical machine that starts out with no applications or operating system on it. It gives you access to all of the machine's resources, so you can configure them as you wish without any virtualization layers. This is beneficial for applications that need high performance and dedicated hardware.

Deploying Kubernetes to bare metal provides a lot of flexibility, because you have direct access to the hardware and can use it however you want. Although it takes experienced engineers to set up, many companies value this option.

This tutorial will guide you through preparing to run Kubernetes on bare metal, installing the required components, deploying a sample application, and checking the result. You could use a tool like Sidero to automate all of this, but that would be much like using a cloud service. It’s better to learn how to set it up yourself.

#What is bare metal Kubernetes?

You can run Kubernetes locally, on virtual machines, or on a managed Kubernetes service. The problem with these options is that you don’t have full access to the machine's resources. This is where bare metal Kubernetes comes in. Running Kubernetes on bare metal gives your clusters and containers direct access to the resources of the physical machine.

Setting up the cluster this way is beneficial for workloads that require high performance, such as high-performance computing, AI/ML workloads, and large-scale database operations.

If you are thinking of building your private cloud infrastructure, bare-metal Kubernetes is a great option.


#Prerequisites

- Two bare metal machines with Ubuntu 22.04 installed (one for the control plane, one for the worker node)

- SSH access to both servers

- Understanding of Kubernetes


#How to deploy Kubernetes on bare metal

#Step 1: Setting up the bare metal infrastructure

Install containerd:

sudo apt install -y containerd

Configure containerd to use SystemdCgroup:

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

Set SystemdCgroup = true in /etc/containerd/config.toml.

This is important because the kubelet and the container runtime must use the same cgroup driver, and kubeadm configures the kubelet to use the systemd driver by default. You can make the change with the following command:

sudo sed -i 's/ SystemdCgroup = false/ SystemdCgroup = true/' /etc/containerd/config.toml

Restart containerd:

sudo systemctl restart containerd
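The sed substitution above exits successfully even when it matches nothing, so it can be worth confirming that the setting really changed before moving on. The following is a minimal sketch of such a check. The function name check_systemd_cgroup is made up for illustration, and it assumes the config layout produced by `containerd config default`.

```shell
# Hypothetical helper (not part of containerd): succeeds only if the given
# config file contains an uncommented "SystemdCgroup = true" line.
check_systemd_cgroup() {
  local config="${1:-/etc/containerd/config.toml}"
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$config"
}
```

For example, `check_systemd_cgroup && echo "systemd cgroup driver enabled"` prints the message only when the setting is in place.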

Install the necessary tools for Kubernetes:

We will install kubelet and kubeadm from the Kubernetes apt repository, and kubectl as a standalone binary afterwards. Start by running the following commands:

sudo swapoff -a # to disable swapping temporarily

sudo sed -i '/[[:space:]]swap[[:space:]]/ s/^/#/' /etc/fstab # maintain swap disabled after reboot

sudo apt-get update

# apt-transport-https may be a dummy package; if so, you can skip that package

# install the necessary dependencies

sudo apt-get install -y apt-transport-https ca-certificates curl gpg socat docker.io

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

# install kubelet, kubeadm

sudo apt-get install -y kubelet kubeadm
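By default the kubelet will not start while swap is active, so before continuing you may want to confirm that the swapoff step took effect. The sketch below counts the active swap devices by reading /proc/swaps, whose first line is a header. The function name active_swap_count is an assumption made for this example.

```shell
# Hypothetical helper: prints the number of active swap devices listed in a
# /proc/swaps-style file. The first line is a header and is skipped.
active_swap_count() {
  local swaps_file="${1:-/proc/swaps}"
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$swaps_file"
}
```

A result of 0 means swap is off, for example: `[ "$(active_swap_count)" -eq 0 ] && echo "swap is off"`.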

Check that the tools were installed properly by printing their versions:

kubelet --version

kubeadm version

Install kubectl binary with curl on Linux:

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl # install kubectl

kubectl version --client # check kubectl version

#Step 2: Initializing the control plane

Before we can use a machine, we first need to make it either a control plane or a worker node. In this section, you will learn how to initialize your servers for Kubernetes. One server will be used as the control plane and the other as the worker node.

First, enable IP forwarding. In a Kubernetes environment, this is important for container-to-container and pod-to-pod communication across different nodes. Run:

echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward

To make this change persistent across reboots, edit /etc/sysctl.conf:

sudo nano /etc/sysctl.conf

Add or uncomment this line:

net.ipv4.ip_forward=1

Then apply the changes:

sudo sysctl -p
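If you would rather script this edit than open an editor, something like the following sketch could do it. It uncomments or rewrites an existing net.ipv4.ip_forward line, or appends one if none exists, so running it twice is harmless. The function name persist_ip_forward is hypothetical, and the sketch assumes GNU sed (as shipped with Ubuntu) and a shell that is already running as root.

```shell
# Hypothetical helper: ensures the given sysctl config file contains an
# active "net.ipv4.ip_forward=1" line. Assumes GNU sed for the -i flag.
persist_ip_forward() {
  local conf="${1:-/etc/sysctl.conf}"
  local pattern='^[[:space:]]*#?[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*='
  if grep -Eq "$pattern" "$conf" 2>/dev/null; then
    # A (possibly commented-out) entry exists: rewrite it in place.
    sed -i -E "s/${pattern}.*/net.ipv4.ip_forward=1/" "$conf"
  else
    # No entry yet: append one.
    echo 'net.ipv4.ip_forward=1' >> "$conf"
  fi
}
```

After calling it, `sysctl -p` still has to be run to load the value, exactly as in the manual steps.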

Next, we need to initialize the Kubernetes control plane. This tutorial uses kubeadm's defaults; if your network add-on needs a specific pod network range, kubeadm init accepts a --pod-network-cidr flag for that. On the control plane node, run:

sudo kubeadm config images pull

sudo kubeadm init

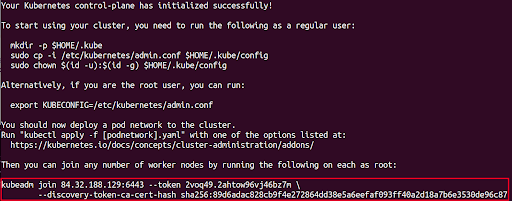

On successful execution, you should see something like the image below:

You will need the command provided to attach a worker node. Set up kubeconfig:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
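Bootstrap tokens created by kubeadm init expire after 24 hours by default, so the join command from the output will eventually stop working. If that happens, or if you lose the output, a fresh join command can be generated on the control plane. The wrapper name print_join_command below is only illustrative; the kubeadm subcommand it calls is the real one.

```shell
# Hypothetical wrapper: creates a new bootstrap token and prints the
# matching "kubeadm join ..." command. Run this on the control plane.
print_join_command() {
  sudo kubeadm token create --print-join-command
}
```

Calling `print_join_command` prints a single line that you can paste on the worker node, prefixed with sudo.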

To enable communication between the pods in your Kubernetes cluster, you need to install a network add-on. You can install Calico with the following command:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

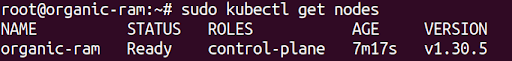

You should see your node in a ready state:
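One way to check node readiness from a script is to count the nodes whose STATUS column is exactly Ready. The sketch below does that; the function name ready_node_count is an assumption for this example, and it relies on kubectl being configured as in the kubeconfig step. Note that a cordoned node reports a status such as Ready,SchedulingDisabled and is deliberately not counted.

```shell
# Hypothetical helper: prints how many nodes report STATUS exactly "Ready".
# The second column of "kubectl get nodes" is the STATUS column.
ready_node_count() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

Right after installing the network add-on this should print 1, and 2 once the worker node has joined and become ready.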

#Step 3: Set up the worker node

To start, run all the commands below on the worker node. They are the same commands you ran on the control plane in the previous sections, so you can copy and paste them into your terminal as they are.

sudo apt install -y containerd

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/ SystemdCgroup = false/ SystemdCgroup = true/' /etc/containerd/config.toml

sudo systemctl restart containerd

sudo swapoff -a # to disable swapping temporarily

sudo sed -i '/[[:space:]]swap[[:space:]]/ s/^/#/' /etc/fstab # maintain swap disabled after reboot

sudo apt-get update

# apt-transport-https may be a dummy package; if so, you can skip that package

# install the necessary dependencies

sudo apt-get install -y apt-transport-https ca-certificates curl gpg socat docker.io

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

# install kubelet, kubeadm

sudo apt-get install -y kubelet kubeadm

echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward

To make this change persistent across reboots, edit /etc/sysctl.conf:

sudo nano /etc/sysctl.conf

Add or uncomment this line:

net.ipv4.ip_forward=1

Then apply the changes:

sudo sysctl -p

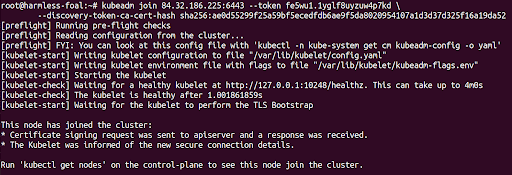

Now run the command that was provided to run attach the worker node you should see something like the following:

#Step 4: Deploying an application

Once your Kubernetes cluster is in place, you are ready to deploy an application. In this tutorial, we will deploy a simple NGINX server.

In Kubernetes, applications are managed through configuration files called manifests. These manifests define the desired state of your application, including how it should be deployed and exposed to the network. Run the steps in this section on the control plane.

Deployments

First, we’ll create a Deployment manifest.

This manifest specifies how many replicas of your application should run, what container image to use, and other details.

Here’s a Deployment manifest for our NGINX server. Save it as deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

The configuration above creates two replicas of the NGINX container. You can apply this configuration by running the following command:

kubectl apply -f deployment.yaml

This command tells Kubernetes to create and manage the deployment according to the specifications in the manifest.
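Applying the manifest returns immediately, before the pods are actually running. If you want a script to block until the rollout has finished, a small wrapper around kubectl rollout status could look like the sketch below. The function name wait_for_rollout and the 120s default timeout are assumptions chosen for this example.

```shell
# Hypothetical wrapper: waits until the named Deployment has finished
# rolling out, or fails once the timeout expires.
wait_for_rollout() {
  local name="${1:-nginx-deployment}"
  local timeout="${2:-120s}"
  kubectl rollout status "deployment/${name}" --timeout="${timeout}"
}
```

For example, `kubectl apply -f deployment.yaml && wait_for_rollout` only returns successfully once both replicas are available.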

Services

Next, we need to expose our application so that it’s accessible from outside the Kubernetes cluster.

This is done by creating a Service manifest.

Below is an example of a Service manifest for our NGINX deployment. Save it as service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer

This manifest tells Kubernetes to expose the NGINX deployment on port 80, making it accessible via the network. To expose the service, run:

kubectl apply -f service.yaml

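Now, your NGINX server is up and running, and accessible through the network. On bare metal there is no cloud provider to provision an external load balancer, so the service's external IP will stay pending unless you add a load balancer implementation yourself. Kubernetes still allocates a NodePort for the service, which is what we will use to reach it.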

#Verifying the deployment

Once your application is deployed, we can now ensure that everything is functioning correctly. This involves ingress controllers to manage external access to your services.

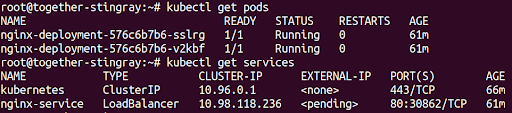

First, check the status of your pods to see if your pods are running

kubectl get pods

You should see two pods with the status "Running" for your NGINX deployment. Now check if your service is properly created:

kubectl get services

You should see your nginx-service listed, along with the NodePort it's using, like you see in the image below.

#Accessing your application

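To access your NGINX server, you'll need the NodePort and the IP address of one of the worker nodes. You can then access the application in a web browser using *http://<node-ip>:30862*, where 30862 is the NodePort from the example output above; replace it with the one assigned in your cluster.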
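Instead of reading the NodePort off the table by eye, you can ask kubectl for it directly with a JSONPath expression. The sketch below assumes the Service is named nginx-service and has a single port, as in the manifest above. The function names service_node_port and app_url are hypothetical, and the node IP is something you supply yourself.

```shell
# Hypothetical helper: prints the NodePort assigned to the first port
# of the given Service.
service_node_port() {
  local service="${1:-nginx-service}"
  kubectl get service "$service" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Hypothetical helper: builds the URL for reaching the Service through a
# node. The node IP address is passed in as the first argument.
app_url() {
  local node_ip="$1"
  echo "http://${node_ip}:$(service_node_port)"
}
```

With the IP address of one of your nodes, `curl -I "$(app_url <node-ip>)"` should return the NGINX response headers.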

A LoadBalancer service is fine during the testing phase, but in practice you will probably want to deploy an Ingress controller to manage outside access to your services.

Ingress controllers are reverse proxies and load balancers that act as a single entry point into the cluster, directing traffic to the various services based on the incoming request.

To deploy one, install the NGINX Ingress Controller with the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/cloud/deploy.yaml

Next, create a file named ingress.yaml with the following content:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - host: yourwebsite.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-service
            port:
              number: 80

Apply the Ingress resource with the following command:

kubectl apply -f ingress.yaml

Now, your NGINX application should be accessible through the Ingress controller. You can check the Ingress controller's external IP or hostname by running the following command:

kubectl get ingress

This should display your Ingress resource and its associated address.
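Because the rule matches on the host yourwebsite.com, a plain request to a node IP will not hit it unless the Host header is set. The sketch below sends such a request through the controller's HTTP NodePort and prints only the status code. It assumes the controller Service is called ingress-nginx-controller in the ingress-nginx namespace and names its port http, which matches the upstream manifest as far as we know but is worth verifying in your cluster. Both function names are hypothetical.

```shell
# Hypothetical helper: prints the NodePort of the controller's "http" port.
# The Service name and namespace are assumptions based on the upstream manifest.
ingress_http_node_port() {
  kubectl -n ingress-nginx get service ingress-nginx-controller \
    -o jsonpath='{.spec.ports[?(@.name=="http")].nodePort}'
}

# Hypothetical helper: requests "/" through the Ingress with the expected
# Host header and prints only the HTTP status code.
curl_via_ingress() {
  local node_ip="$1"
  local host="${2:-yourwebsite.com}"
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Host: ${host}" "http://${node_ip}:$(ingress_http_node_port)/"
}
```

Running `curl_via_ingress <node-ip>` with one of your node addresses should print 200 if the Ingress is routing to nginx-service.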


#Conclusion

In this article, we explained how to prepare your infrastructure for bare metal Kubernetes installation, install various components, deploy a sample application, and finally, check the result of our efforts.

This is a rather surface-level article, and a realistic application will need a far more thorough setup, but it is good enough to get you started on the right track.
